Forbes – Waymo Runs A Red Light And The Difference Between Humans And Robots

Editor's note: A Waymo ran through a red light, causing the rider of a scooter to crash. The vehicle was being remotely operated, and the operator apparently didn’t see the red traffic signal. This shows that Waymo has a backup system, but that it is not safe either.

See full original article by Brad Templeton at Forbes

In January, a Waymo robotaxi went through a red light because of an incorrect command from a remote operator, as reported by Waymo. A moped started coming through the intersection. The moped driver, presumably reacting to the Waymo, lost control, fell and slid, but did not hit the Waymo, and there are no reports of injuries. There may have been minor damage to the moped.

This incident took place because the Waymo went through the red light after getting a command from a human remote operator who missed that there was a red light. Remote assistance had been invoked because of construction at the intersection. Once the vehicle’s systems saw the moped, the vehicle braked and a collision was avoided, but this is clearly not a desirable situation. While it probably teaches lessons about how better to manage remote operators, in the end this comes down to a human driving error rather than a robotic one. Waymo says it has taken steps to prevent this in future, which is the purpose of these pilot projects. It temporarily blocked the intersection, remapped it and gave more training to remote operators. Absent some luck, this could have become Waymo’s most serious incident, and even though the Waymo Driver system would not be directly implicated, its remote assistance design might have been.

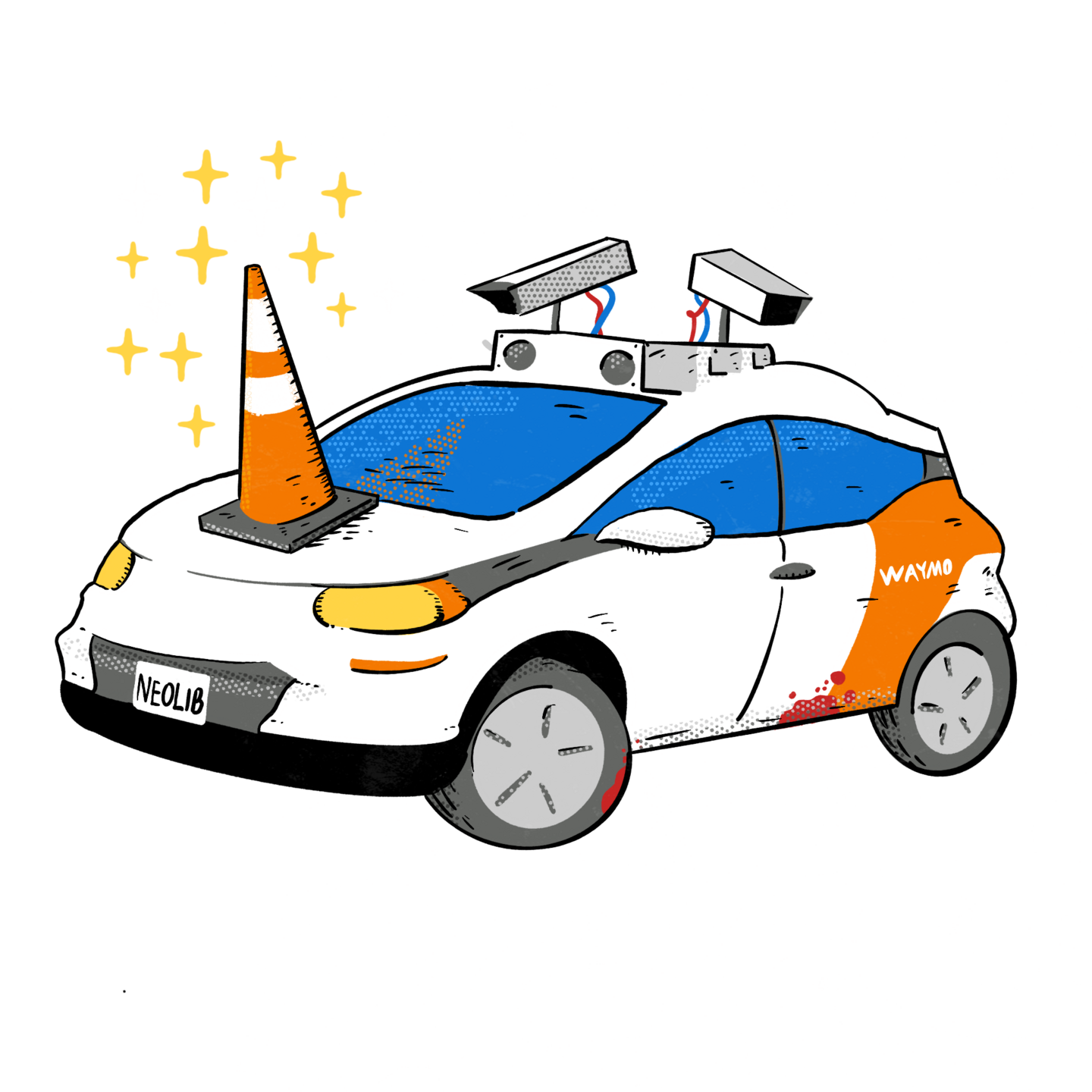

That raises the question of how crashes caused by robots differ from those caused by humans. Very early in the world of robocars, one would see a Venn diagram like this one:

This illustrated that the goal was to get the rate of crashes caused by robocars much lower than the rate of those caused by people. At the same time, an issue arose: while some of those crashes would look just like the ones caused by humans, some portion would be crashes that humans would never have, a sort of “uncanny valley” of crashes. The concern was that because people would not understand these robot-only crashes, they might fear them more than the crashes we were used to, even though there were fewer of them. That fear might drive irrational decisions, even though everyone agrees that having far fewer crashes is a desirable win.

As time has gone on, I wonder if the diagram might look more like this. It may be that the intersection is small, and most robocar crashes are the frightening, inhuman kind. Even if not, what’s clear is that those inhuman crashes get the most attention.

The Waymo that went through a red light presents a familiar situation: lots of crashes are caused by human drivers who miss a red light. And in this case, it was a human who made that error. Some other crashes we’ve seen in the news have been fairly ordinary.

We’ve also seen crashes that baffle us, the most prominent being Cruise’s dragging of a pedestrian under the car. She went under the car because she was hit by another car, and that wasn’t Cruise’s fault, but we all imagine a diligent human (not that all humans are diligent) would never, after running over somebody due to external factors, decide to just drive and pull over without figuring out where she went. The Cruise car thought she had hit the side of the car and thus she wouldn’t be under it, but we hope a human would check. Of course, humans, unlike robots, often panic, and we might imagine what the Cruise car did as a sort of panic—it obeyed imperatives to pull over and not block the road after any incident.

Another recent event involved two different Waymos hitting the same pickup truck that was being towed badly in the middle left-turn lane of a street. The way the vehicle was being towed put it at an angle, sticking its nose into the Waymo’s lane. Computerized prediction systems thought this would be temporary and the vehicle would (like a normally towed vehicle) get back in line behind the tow truck, but that didn’t happen, and the Waymo struck it with a small glancing blow. A few minutes later, another Waymo, thinking just the same way, did the same thing. Humans make bad predictions, but we’re also good at seeing when they have gone wrong and updating them, and the robot wasn’t.

When a Cruise hit the back of a bus that was braking in front of it, the internal reason was very robotic: a strange bug in how it understood the way an articulated (bendy) bus drives and where it is going. At the big-picture level, though, humans hit big obvious targets all the time because they’re just not looking.

Cruise got punished heavily for the dragging, and the DMV said this was both because they acted unsafely (causing significant injury) and because for a while they tried to cover it up. The latter sin is probably the greater one, but the DMV has yet to say just where the “unsafe” line is, and why a single incident apparently crossed it. While Cruise should not have dragged anybody, such an event is much too rare to justify a fleet shutdown on its own. This raises the question of whether the inhumanity of the events played a role. (The DMV in the past has declined to elaborate on their rulings.)

One reason the intersection of the two circles will shrink is that teams understand human-style crashes and will work to eliminate them. Every team does heavy testing in the simulator, and they try to load their simulator with crash scenarios. This involves trying to simulate any crash they’ve heard of, including crash reports that come in from human crashes in the real world. They also try to simulate situations which might cause a robot-only crash, but they don’t have any intuition about those. Rather, they will have scenarios for any such situation that they have encountered in testing, plus ones discovered with adversarial AI testing, where an AI works to find things that make the car fail. This will not be as complete a set as what can be built for human-type crashes. Over time, that will shrink the intersection: essentially, if a crash type is well understood and occurs in ordinary traffic today, work in the simulator will make the vehicle less likely to have it.
