arsTechnica – Feds probe Waymo driverless cars hitting parked cars, drifting into traffic

See original article by Ashley Belanger at arsTechnica

Auto-safety regulator is investigating 22 reports of Waymo cars malfunctioning.

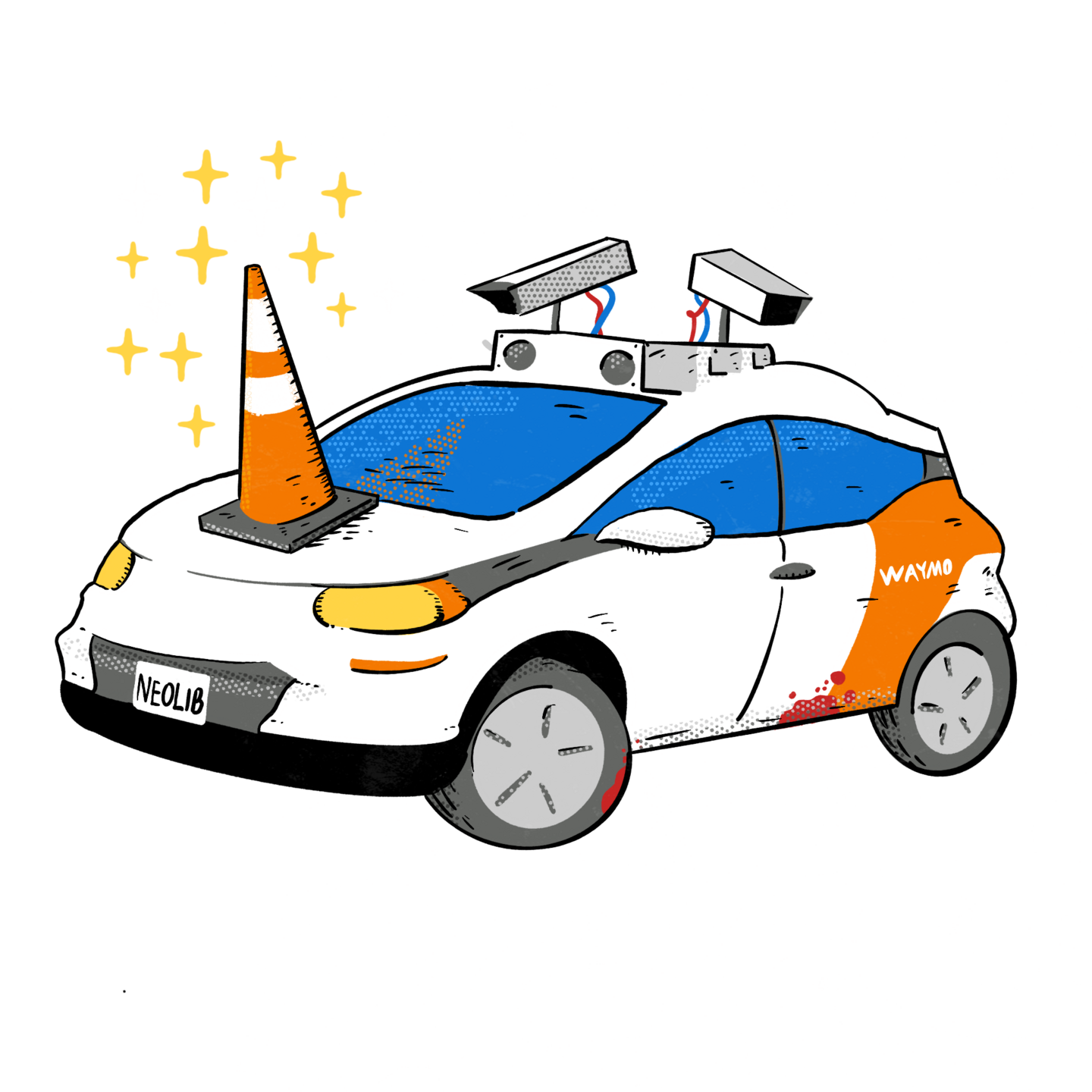

Crashing into parked cars, drifting over into oncoming traffic, intruding into construction zones—all this “unexpected behavior” from Waymo’s self-driving vehicles may be violating traffic laws, the US National Highway Traffic Safety Administration (NHTSA) said Monday.

To better understand Waymo’s potential safety risks, NHTSA’s Office of Defects Investigation (ODI) is now looking into 22 incident reports involving cars equipped with Waymo’s fifth-generation automated driving system. Seventeen incidents involved collisions, but none involved injuries.

Some of the reports came directly from Waymo, while others “were identified based on publicly available reports,” NHTSA said. The reports document single-party crashes into “stationary and semi-stationary objects such as gates and chains” as well as instances in which Waymo cars “appeared to disobey traffic safety control devices.”

The ODI plans to compare the incidents to determine whether Waymo cars pose a safety risk or need updates to prevent malfunctions. Evidence from the ODI’s initial evaluation already shows that Waymo’s automated driving system (ADS) was either “engaged throughout the incident” or abruptly “disengaged in the moments just before an incident occurred,” NHTSA said.

The probe is the first step before NHTSA can issue a potential recall, Reuters reported.

Earlier this year, Waymo voluntarily recalled more than 400 self-driving cars after back-to-back collisions in Arizona. Waymo relies on machine learning to “interpret complex object and scene semantics” so that its cars can navigate roads safely. Even so, the company has said it is mostly focused on responding to varied weather patterns and the less predictable movements of emergency vehicles, and that it is still learning how unpredictable the road can be.

In a blog post, Waymo explained that a software defect caused two separate Waymo cars to drive into the same “backwards-facing pickup truck being improperly towed” in December 2023.

“We determined that due to the persistent orientation mismatch of the towed pickup truck and tow truck combination, the Waymo AV incorrectly predicted the future motion of the towed vehicle,” Waymo’s blog said.

Within 10 days, Waymo pushed a software update to fix the problem, which it described as a “rare issue” that “resulted in no injuries and minor vehicle damage.”

A Waymo spokesperson told Ars that Waymo currently serves “over 50,000 weekly trips for our riders in some of the most challenging and complex environments.” Waymo reviews every collision and continually updates its ADS software to improve performance.

“We are proud of our performance and safety record over tens of millions of autonomous miles driven, as well as our demonstrated commitment to safety transparency,” Waymo’s spokesperson said, confirming that Waymo would “continue to work” with the ODI to enhance ADS safety.

While Waymo cars failing to recognize an unusual object on the road may be somewhat expected on what Waymo described as its “rapid learning curve,” keeping its cars out of construction zones appears to be a persistent challenge, despite being a “key focus” for Alphabet’s self-driving car company.

In a blog post last summer, Waymo said that as it expands into “some of the largest and densest cities in the US,” the “sheer amount of construction taking place” means its cars are now driving “around active construction zones day and night.”

Waymo said that although its cars have gotten better at “responding to handheld signs, maneuvering around construction cones that come in all colors and sizes, and changing lanes for road work ahead even if the proper signage is missing,” its “work is not done.”

The ODI’s probe may lead to an update aimed specifically at making Waymo cars safer around construction zones, since a Waymo car suddenly entering a work site could cause injuries or even fatalities. In recent years, more than 800 people have died annually in work zone traffic crashes, the Federal Highway Administration reported.

While other self-driving car companies have made headlines for shocking accidents, including two nighttime fatal crashes involving Ford BlueCruise vehicles and a Cruise robotaxi dragging a pedestrian 20 feet, Waymo has boasted about its safety record.

In December, Waymo released safety data, based on 7.1 million miles of driverless operations, showing that human drivers are four to seven times more likely than Waymo cars to cause injuries.

NHTSA appears to be closely monitoring all self-driving car companies, helping to trigger recalls of more than 900 Cruise vehicles and a whopping 2 million Teslas—which covered every car with Autopilot—last year. More recently, the agency began probing Amazon’s Zoox after its autonomous SUVs braked unexpectedly, injuring motorcyclists who couldn’t react in time and rear-ended the vehicles, Bloomberg reported.

Car companies have responded to heightened scrutiny by deploying software updates as red flags are raised, but even then, NHTSA is not always satisfied by fast fixes. In April, for example, the agency expressed concern that updates to Tesla’s Autopilot supposedly addressing the issue of Teslas repeatedly crashing into parked emergency responder vehicles didn’t actually make Autopilot much safer.

Waymo said that NHTSA “plays a very important role in road safety,” and cooperating with the probe would help further its “mission to become the world’s most trusted driver” as it expands its driverless operations. Most recently, Waymo has moved beyond ride-hailing services and into the food-delivery world through a partnership with Uber Eats that could be popular with people who got hooked on contactless delivery during the pandemic.
