Washington Post – Major robotaxi firms face federal safety investigations after crashes

See original article by Trisha Thadani and Ian Duncan at Washington Post

A year ago, the companies seemed poised for a breakout moment. Now, the industry is struggling against setbacks and scrutiny.

SAN FRANCISCO — All three major self-driving vehicle companies are facing federal investigations over potential flaws linked to dozens of crashes, a sign of heightened scrutiny as the fledgling industry lays plans to expand nationwide.

The National Highway Traffic Safety Administration (NHTSA) announced this month that it would examine two rear-end collisions involving motorbikes and vehicles operated by Amazon’s Zoox, which is already testing cars without steering wheels or brakes for passengers to operate.

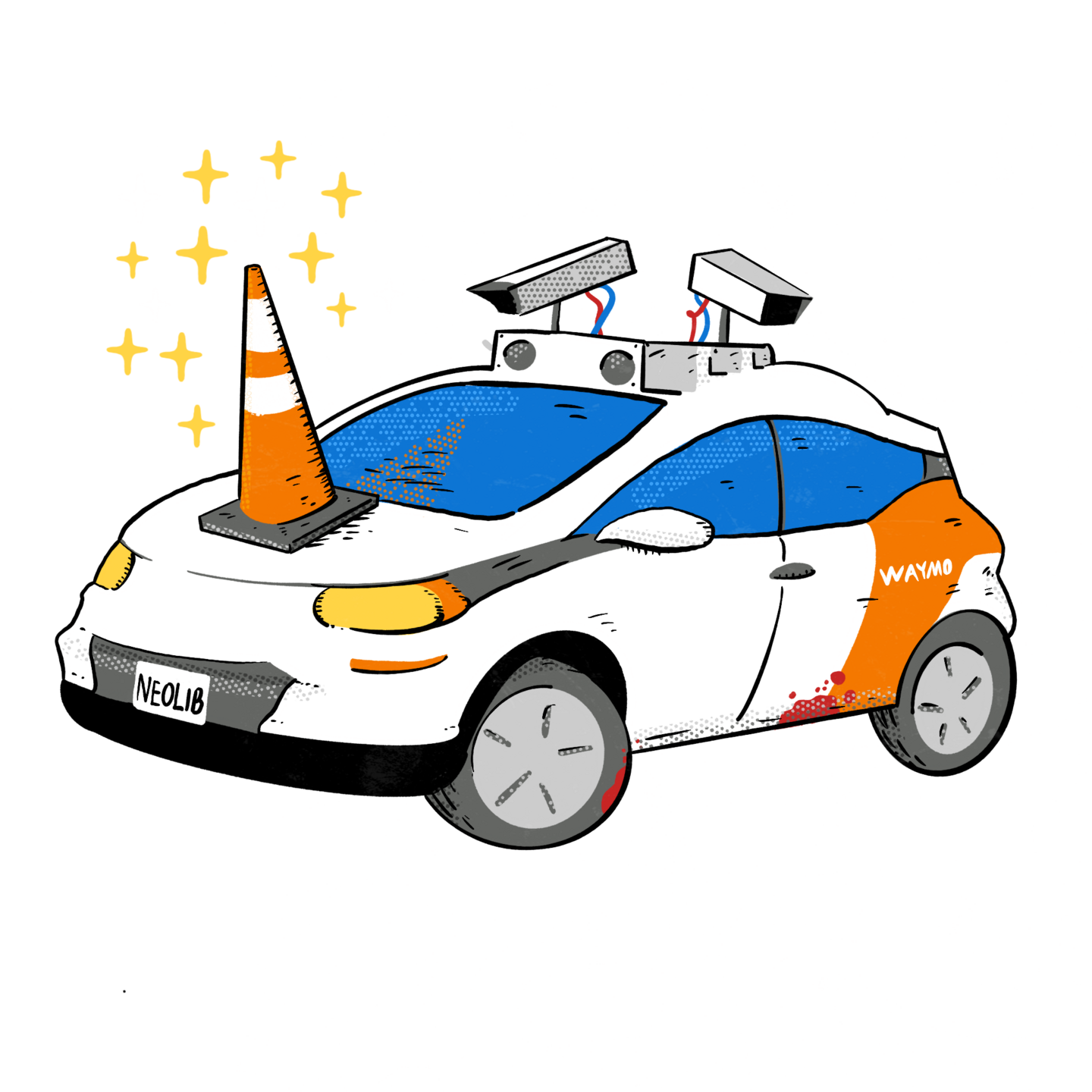

A few days later, the agency opened a probe into robotaxis operated by Waymo, owned by Google parent Alphabet, over 31 incidents that included ramming into a closing gate and driving on the wrong side of the road. A federal investigation into GM’s Cruise — launched last year after one of its cars hit and dragged a jaywalking pedestrian in San Francisco — remains open, even as the company began a return to public roads this month in Phoenix.

The industry is poised for growth: About 40 companies have permits to test autonomous vehicles in California alone. The companies have drawn billions of dollars in investment, and supporters say they could revolutionize how Americans travel. But robotic cars are still very much in their infancy, and while the bulk of the collisions flagged by NHTSA are relatively minor, they call into question the companies’ boasts of being far safer than human drivers.

“The era of unrealistic expectations and hype is over,” said Matthew Wansley, a professor at the Cardozo School of Law in New York who specializes in emerging automotive technologies. “These companies are under a microscope, and they should be. Private companies are doing an experiment on public roads.”

Dozens of companies are testing self-driving vehicles in at least 10 states, with some offering services to paying passengers, according to the Autonomous Vehicle Industry Association. The deployments are concentrated in a handful of Western states, especially those with good weather and welcoming governors.

According to a Washington Post analysis of California data, the companies in test mode in San Francisco collectively report millions of miles on public roads every year, along with hundreds of mostly minor collisions.

An industry association says autonomous vehicles have logged a total of 70 million miles, a figure that it compares with 293 trips to the moon and back. But it’s a tiny fraction of the almost 9 billion miles that Americans drive every day. The relatively small number of miles the vehicles have driven makes it difficult to draw broad conclusions about their safety.

Federal car regulations allow the companies to put vehicles on the road without anyone in the driver’s seat. Without federal regulation controlling what technology is allowed on the roads, NHTSA is relying on its investigations and recall power in a retroactive effort to keep the companies in check. Since 2021, the agency has required operators to report crashes, data that it has used to justify the latest probes.

Together, the three investigations opened in the past year examine more than two dozen collisions potentially linked to defective technology. The bulk of the incidents were minor and did not result in any injuries.

NHTSA declined to comment on the investigations.

Wansley said NHTSA appears to be running something like a “surveillance program.” Opening probes and then ordering a recall is a much faster process for the agency than developing new regulations, he said, which would have to go through a tangle of government bureaucracy that could take years.

“NHTSA wants to know what’s going on the ground, what’s the state of automated driving technology, what kinds of safety risks there are, what steps are companies taking,” he said.

But as the incidents pile up, the agency is under increasing pressure from lawmakers, labor unions and road-safety advocates to be more proactive, rather than resorting to investigating after things go wrong.

“Innocent people are on the roadways, and they’re not being protected as they need to be,” said Cathy Chase, the president of Advocates for Highway and Auto Safety.

The industry has responded with safety pledges and by emphasizing efforts to respect other road users.

Cruise, once a leader in the self-driving space, shook public trust in the technology last year after a collision in San Francisco. In October, a Cruise vehicle hit and rolled over a jaywalking pedestrian who was flung into its path by a hit-and-run driver and then dragged for 20 feet. The company recently reached a settlement with the victim.

The California DMV pulled Cruise’s permits after determining that the robotaxis were causing an “unreasonable risk” to public safety and that the company initially “failed to disclose” the entire series of events. Cruise suspended its driverless operations around the country. The company installed a new management team and spent months running simulations before putting its autonomous vehicles back on the road last week in Phoenix.

Mo Elshenawy, one of Cruise’s co-presidents and its chief technology officer, said the company is raising the bar for what it considers good performance. Before, he said, the benchmark was a human ride-share driver.

Now, he said, Cruise is aiming to be a “role model driver” as it seeks to win back the trust of regulators. That will mean avoiding driving clumsily or in an un-humanlike way, and driving like someone who is alert, obeying the law, and not impaired by drugs or alcohol, executives said. The company is focused on better performance in construction zones and when emergency vehicles are close by — common situations on the roads where automated vehicles and driver assistance systems have often struggled.

“When it comes to public perception, when it comes to regulatory perception, in terms of everyone’s perception, this is something that we’ve missed the mark on,” Elshenawy said.

In its latest inquiry, NHTSA took aim at Google’s Waymo — the most prominent self-driving car company, which has been operating a robotaxi service in San Francisco, Los Angeles and Phoenix. The federal agency said it looked at 31 reports of incidents — at least 17 of which involved a crash or a fire — in which a Waymo automated vehicle was the sole one operated during a collision, or exhibited behavior that potentially violated traffic safety laws.

In October, a Waymo autonomous vehicle attempted to exit a parking lot in Phoenix while the gate was closing. The passenger-side sensors and side mirror made contact with the gate. Similar incidents took place in Scottsdale, Ariz., and Los Angeles in recent months, according to incident reports disclosed by NHTSA. In January, a Waymo vehicle outside of Phoenix tried backing up from a closed gate, but instead rolled over security spikes and deflated its tires.

Waymo said in a statement last week that it was proud of its safety record and would work with NHTSA as it aims to become “the world’s most trusted driver.” The company did not respond to an interview request.

For Christopher White, interim executive director of the San Francisco Bike Coalition — a prominent voice in the city that has opposed the expansion of the vehicles on the dense urban roads — the federal probe highlights how unpredictable the vehicles can be.

“Predictability is one of the most important aspects of safety on our streets,” he said. “And if a vehicle is behaving unpredictably, it may not result in a crash or a collision, but it could result in a near miss that you don’t necessarily hear about.”

Zoox says on its website that its vehicles are “designed for AI to drive, and humans to enjoy.” In launching the Zoox review, NHTSA said it received two reports of modified Toyota Highlanders with the Amazon unit’s automated-driving system braking suddenly in intersections, leading motorcyclists to crash into the backs of the SUVs. (Amazon founder Jeff Bezos owns The Washington Post.)

In one of the incidents, a Zoox vehicle was bearing right in an intersection when a motorcycle came up from behind and hit the vehicle on the passenger side, according to a company disclosure filed with NHTSA. The rider declined medical attention, according to the report, and the police were not called. The other incident took place in Nevada, where the DMV has authorized 14 operators to test automated vehicles.

In a statement last week, Zoox said it would cooperate with NHTSA’s investigation. The company declined to further discuss its rollout plans and the federal scrutiny.

With limited federal regulation, states have sought to manage deployments themselves. But officials around the country have been looking to Washington for help.

J.D. Decker, the Nevada DMV’s chief of enforcement, said that while the agency can theoretically revoke the licenses of companies with poor safety records under a 2017 law, it has limited resources to assess the performance of robotaxi firms, leaving the state mostly reliant on NHTSA to hold them accountable.

“The law was fashioned broadly to welcome new technologies,” Decker said. “The department in its regulatory capacity wasn’t necessarily given the means to monitor or inspect for safety considerations. We don’t have that capacity.”
