Insurance Institute for Highway Safety (IIHS) – On vehicle automation, federal regulators have some catching up to do

See original article by David Kidd at IIHS

Shopping for a new car? If it’s been a while since you’ve set foot in a dealership, you may feel as if you’ve stepped into the future. Many models on dealer lots today promise to relieve you of much of the drudgery of driving — controlling your speed and lane position and in some cases changing lanes or allowing you to take your hands off the wheel.

Hailing a ride in Phoenix? You can be picked up by a fully autonomous car with no driver, using an app on your phone to unlock the doors and tell the car where you want to go.

Unfortunately, nobody knows whether either of these technologies, the partial automation available to consumers or the driverless vehicle fleets deployed on a more limited basis, is safe. Federal regulators have struggled to stay ahead of the risks that come with innovations. Commonsense guardrails are missing, and a lack of crash-reporting requirements has left researchers like me without the robust data we need to evaluate the safety of these systems.

Last month, a group of six U.S. senators sounded the alarm about the hands-off approach to automation taken by the National Highway Traffic Safety Administration (NHTSA). In a letter to the agency, they warned that inappropriate use of partial driving automation systems, fueled in part by misleading marketing practices, is putting road users at risk. They also highlighted that highly automated vehicles — what the public often thinks of as “self-driving cars” — are being tested on public roads without having to meet any additional safety requirements or performance standards beyond those of conventional vehicles. The senators urged the agency to address these problems and require more complete reporting about the use of automation in a crash.

The missive from Capitol Hill echoes calls for NHTSA to take more decisive action that my IIHS colleagues and I have been making for years. Automation has the potential to reduce or eliminate human mistakes and enhance safety, but we have yet to find consistent evidence that existing automated systems make driving safer. What is clear is that automation can introduce new, often foreseeable, risks. Unfortunately, by not requiring complete data about crashes involving automation, NHTSA has made it harder to reduce these risks.

In their letter, Senators Edward Markey, Richard Blumenthal, Peter Welch, Elizabeth Warren, Ben Ray Luján and Bernie Sanders pointed out that drivers are using partial driving automation systems on roads and in circumstances that the technology was not designed for. They added that, even when used in appropriate locations, the technology can encourage driver complacency, overconfidence and distraction.

Nobody should be surprised that drivers are misusing these systems. Back in 2016, I submitted a comment on behalf of IIHS on NHTSA’s initial Federal Automated Vehicles Policy. In it, we warned NHTSA that drivers were apt to confuse partial driving automation systems with more advanced automation, resulting in misuse and overreliance. We recommended that the agency require automakers to develop strategies to decrease the likelihood of mistakes and mitigate their costs. That includes constraining the use of partial driving automation systems to the roads and conditions they were designed for. The National Transportation Safety Board subsequently made similar recommendations to NHTSA following multiple fatal crash investigations involving Tesla vehicles equipped with Autopilot.

As the senators pointed out, one likely reason drivers are misusing these systems is advertising that exaggerates their capabilities, which NHTSA and other government agencies should take action to curtail. This, too, is not a new concern. In 2019, IIHS researchers published the results of a survey that showed a link between names like “Autopilot” and people’s misperceptions about how much of the driving the system could handle.

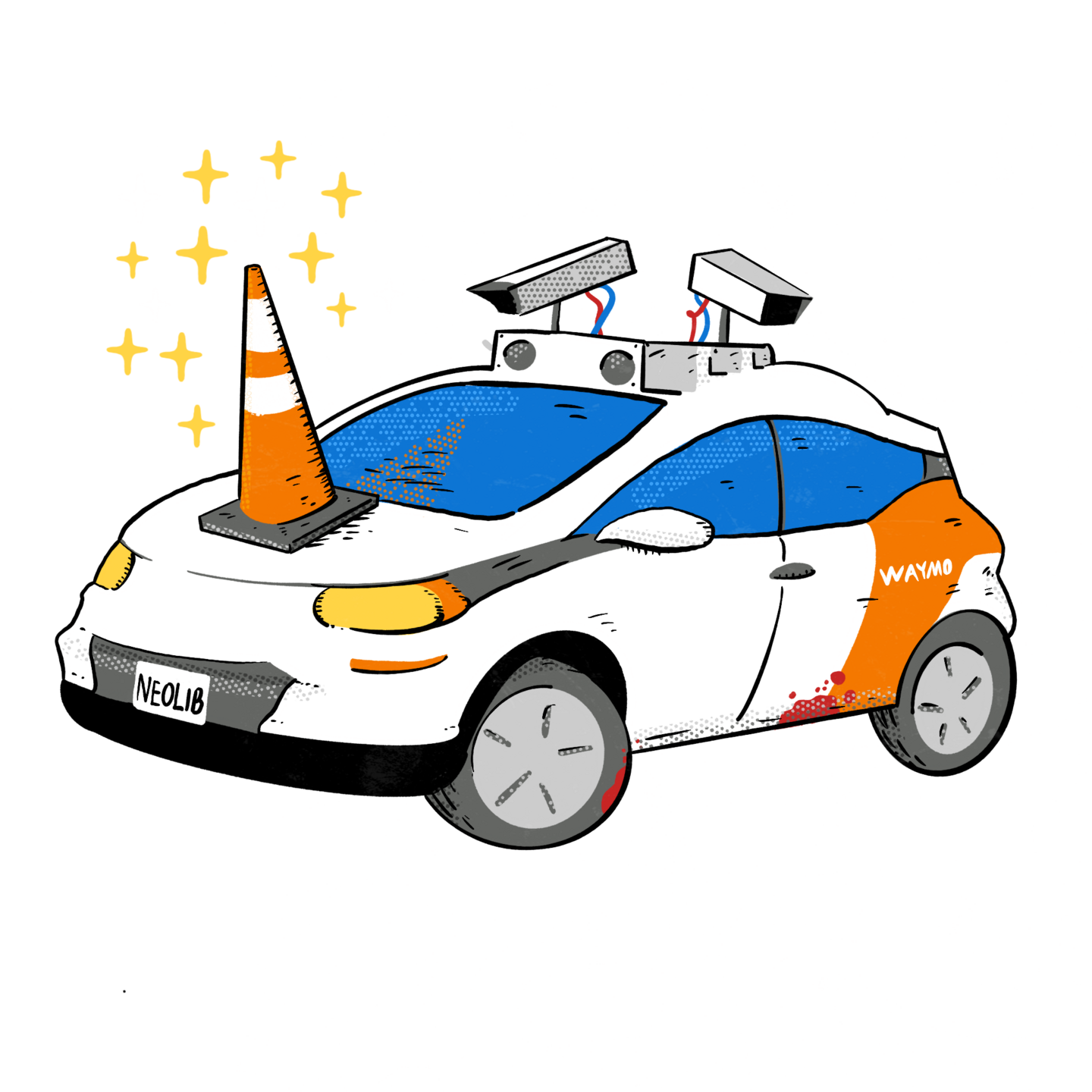

We know a lot less when it comes to more highly automated fleets. News reports, social media, and similar outlets have painted a comical and dismal picture of “robot cars” being stumped by traffic cones, driving in endless circles before picking up a fare, or getting stuck in construction zones. Unfortunately, this is also how we typically first hear of safety failures and crashes.

The issue is further complicated when automated vehicle companies are not transparent or truthful. In their letter, the senators expressed concern that Cruise withheld information from NHTSA and California officials after one of its vehicles dragged a pedestrian who had been struck by another vehicle. They also cited an investigative report last year that revealed the company was aware that its vehicles often fail to detect children. IIHS evaluates whether new passenger cars automatically apply the brakes to avoid children and adults; many new vehicles do. It is unimaginable that a company deploying highly automated vehicles would not have to demonstrate mastery of such a basic crash avoidance function.

The senators urged NHTSA to require more complete reporting about the use of automation in a crash. Researchers like me need this data to fully understand the effects of these technologies on safety. NHTSA itself needs it to guide the development of new regulations. In 2017, I drafted comments and gave testimony to NHTSA to encourage the agency to develop and maintain a database of vehicles equipped with partial driving automation systems. Such a database could be connected to crash databases or to HLDI’s insurance claim database in order to evaluate real-world safety. IIHS has used this approach to successfully document the safety benefits of driver assistance technologies like automatic emergency braking.

NHTSA has taken some steps to collect data. In 2021, the agency issued an order requiring manufacturers to report a property-damage or injury crash if the equipped automated driving system or partial driving automation system was in use within 30 seconds of the crash and, for vehicles with partial automation, an airbag deployed or the vehicle was towed away.

Unfortunately, the records are duplicated, unverified or incomplete and include vague or inconsistent descriptions of crash severity and damage. Some records also are redacted because they purportedly “contain confidential business information.” For example, more than 80% of crash narratives involving vehicles with partial automation were redacted for this reason, and nearly one-quarter of the reports on crashes involving highly automated vehicles cited this reason for not stating whether the vehicle was in its operational design domain when it crashed. The database facilitates reporting to initiate defect investigations but could do more to help researchers analyze systems’ safety and performance.

Beyond this weak reporting requirement, NHTSA has yet to issue any regulations to ensure driving automation systems are safe. Instead, it has limited itself to what the senators called “after-the-fact responses” — investigating defects and recalling vehicles that already have a safety problem. Recent NHTSA probes into Tesla’s Autopilot and Ford’s BlueCruise partial driving automation features following multiple fatal crashes illustrate how the agency is struggling to coax the horse back into the barn.

Because of NHTSA’s inaction, IIHS has stepped into the role of de facto regulator. We developed and recently rolled out a ratings program that encourages manufacturers to incorporate safeguards to help ensure drivers use the technology responsibly and appropriately. But this program doesn’t address all the issues with automation. And while automakers often respond to our ratings by improving the safety of their products, unlike NHTSA, we can’t compel them to make changes.

We applaud the six senators who put NHTSA on notice about its lack of action to regulate driving automation technology and added weight to the long-standing call for action. NHTSA is charged with saving lives, preventing injuries and reducing costs from traffic crashes. It’s time for the agency to take a hard look at how automated systems are affecting those goals.
