AutoGuide – General Motors Wants To Stop People Being Mean To Autonomous Vehicles

Editor's note: the patent covers having the vehicle handle an adversarial pedestrian by either handing off control to a qualified occupant (who would, of course, be in the back seat and unable to drive!), having the car reroute to get out of there (which would certainly be dangerous), or having the car be teleoperated (which would be incredibly dangerous!).

See original article by Michael Accardi at AutoGuide

General Motors has been granted a patent for a system that will attempt to prevent autonomous cars from being bullied by pedestrians or human drivers.

As autonomous vehicles become increasingly integrated into urban environments (at least in California and Arizona), they face a unique and growing challenge: mean behavior from pedestrians, cyclists, and other human road users. Such behavior often hilariously exploits the safety-first programming of autonomous systems, creating situations that disrupt traffic flow and potentially compromise safety.

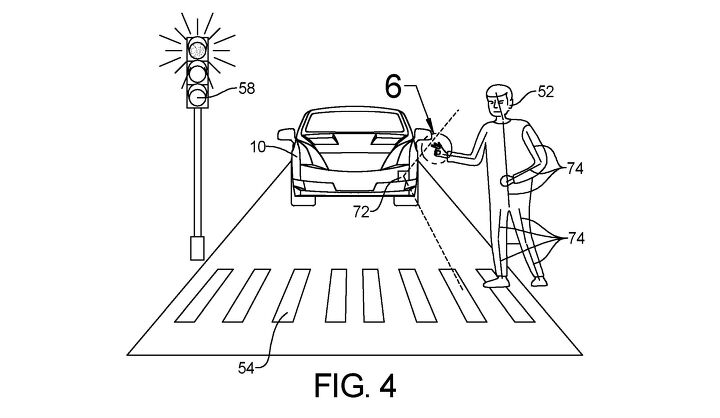

The USPTO recently published a patent application from General Motors for a system titled “Responses To Vulnerable Road User’s Adversarial Behavior,” or, in plain speak, responses to bullying by pedestrians.

GM’s system will use sensors, cameras, and machine learning algorithms to identify potential threats from pedestrians by analyzing their movement patterns and proximity, before evaluating the individual’s intent to interact with the autonomous vehicle.

The document lists examples of adversarial behavior including deliberate violations of right-of-way, sudden movements designed to force an autonomous vehicle to brake, tailgating by aggressive drivers, and even rude gestures or verbal provocations directed at the vehicle. The included illustrations show the “OK” hand symbol as an example of a rude gesture.

GM claims the system will be able to distinguish between a pedestrian inadvertently stepping into the road and one intentionally challenging the vehicle’s braking system. Once adversarial behavior is detected, the system enables the autonomous vehicle to respond appropriately.

Basically, once the AV decides people are being mean, it goes through a decision tree to pick the most appropriate response based on the level of risk it faces. The choices are visual or audible warnings, such as honking and flashing lights, meant to scare the threat away; phoning home to a call center that alerts authorities; giving up and transferring control to one of its passengers; or simply backing away and finding an alternate route.
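The article does not say how the patent ranks these responses or measures risk. Purely as an illustration, here is a minimal Python sketch of a risk-based decision tree over the four responses listed above. The numeric risk score, the thresholds, the escalation order, and the names `Response` and `choose_response` are all hypothetical assumptions, not GM's design.

```python
from enum import Enum, auto


class Response(Enum):
    WARN = auto()         # honk and flash lights to scare the threat away
    REROUTE = auto()      # back away and find an alternate route
    HAND_OFF = auto()     # transfer control to a qualified passenger
    CALL_CENTER = auto()  # phone home; the call center alerts authorities


def choose_response(risk: float, has_qualified_occupant: bool, can_reroute: bool) -> Response:
    """Pick a response from a hypothetical risk score in [0, 1].

    The thresholds and ordering are illustrative assumptions only.
    """
    if not 0.0 <= risk <= 1.0:
        raise ValueError("risk must be between 0 and 1")
    if risk < 0.3:
        # Low risk: a warning may be enough.
        return Response.WARN
    if can_reroute and risk < 0.6:
        # Moderate risk: leave the situation if a route is available.
        return Response.REROUTE
    if has_qualified_occupant and risk < 0.8:
        # Higher risk: hand control to a passenger who can drive.
        return Response.HAND_OFF
    # Highest risk, or no other option: escalate to remote humans.
    return Response.CALL_CENTER
```

For example, `choose_response(0.5, has_qualified_occupant=True, can_reroute=False)` falls through the reroute branch and returns `Response.HAND_OFF`. Any real system would, of course, weigh far more inputs than a single score.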

The problem is quite topical, given the slew of complaints in recent months from Waymo passengers trapped in their autonomous cabs while the vehicles were vandalized or, in one case, by a man asking a woman for her number.

Pedestrian and autonomous vehicle interaction is a touchy subject for General Motors. In October 2023, one of its automated Cruise vehicles operating in San Francisco ran over a woman and dragged her 20 feet after she had been struck by another vehicle in a hit-and-run. Cruise vehicles have recently returned to action in the Bay Area after the company halted operations following the incident.
