Washington Post – Crashes involving Tesla’s Full Self-Driving prompt new federal probe

Editor’s note: The National Highway Traffic Safety Administration is now investigating four Tesla crashes, including one fatality, in which “Full Self-Driving” was engaged. All four crashes occurred in poor visibility. This suggests that Tesla’s reliance on computer vision alone, without LiDAR, is insufficient for autonomous driving. That is extremely pertinent given that Tesla’s stock price now rests on robotaxis, which in turn depend on technology that Tesla does not have and is not pursuing.

See also NY Times – Tesla Self-Driving System Will Be Investigated by Safety Agency

See full article by Ian Duncan and Aaron Gregg at Washington Post

The National Highway Traffic Safety Administration opened an investigation into four crashes in which the system was engaged.

Federal car safety regulators are investigating reports of four crashes involving Tesla’s Full Self-Driving technology that happened on roads where visibility was limited by conditions like fog and dust, the latest probe to raise questions about the safety of the electric-car maker’s signature technology.

The most serious incident led to the death of a 71-year-old woman who had gotten out of her vehicle to help at a crash scene in November 2023, according to federal and state authorities.

The investigation marks another step in the National Highway Traffic Safety Administration’s long-running effort to scrutinize Tesla’s driver-assistance systems, which have been linked to numerous crashes and multiple deaths. It highlights ongoing questions about safety even as the company is promising to put fully autonomous vehicles on the road as early as next year, a far more complicated technological feat than assisting a human driver.

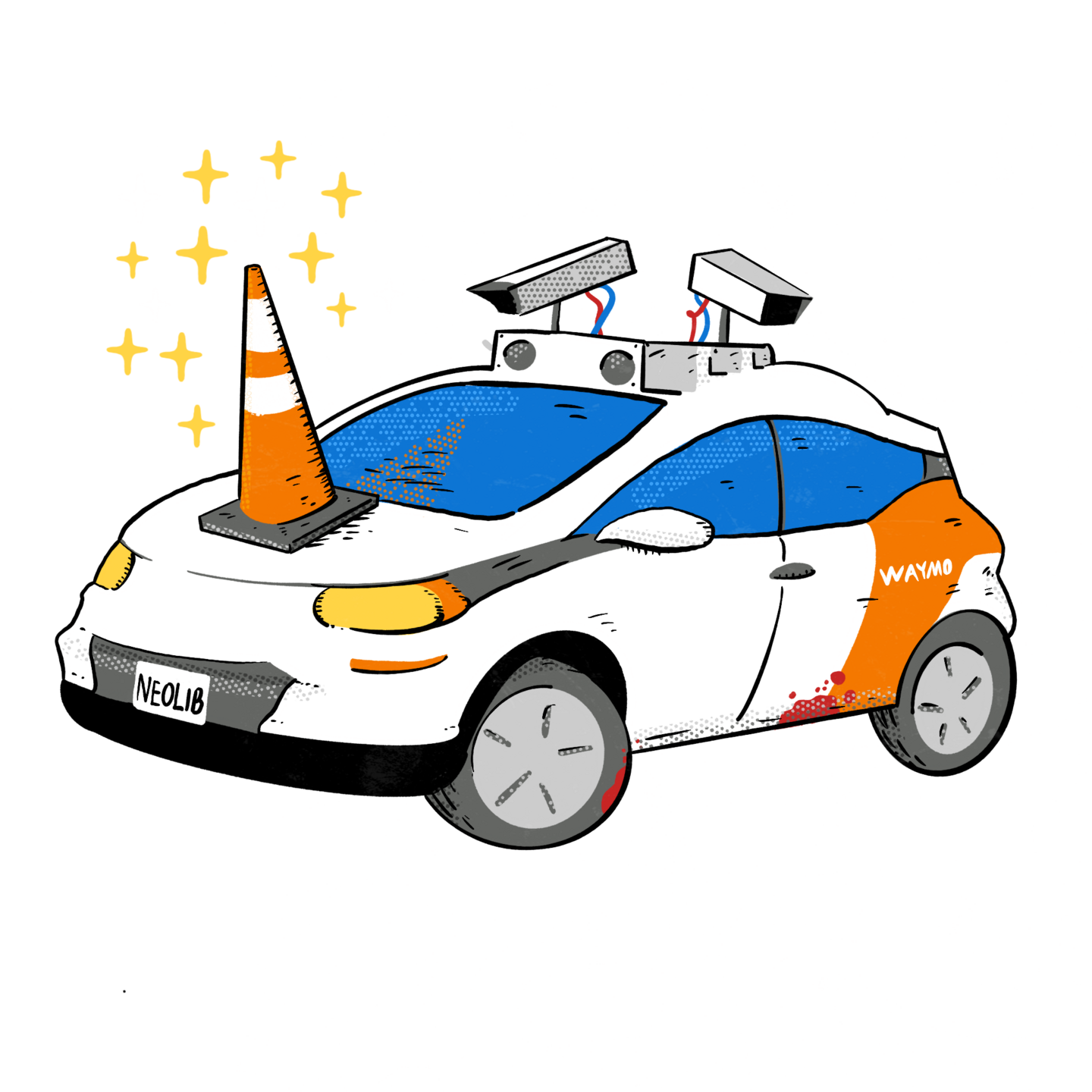

The new probe’s focus on bad visibility could also test Tesla chief executive Elon Musk’s assertion that cameras are enough to guide its vehicles without the aid of other kinds of sensors, such as radar or lasers.

Most of the federal agency’s previous work on Tesla’s software has focused on the less-sophisticated Autopilot system. The new examination targets Full Self-Driving software, which the automaker advertises as allowing vehicles to drive themselves “almost anywhere with minimal driver intervention.”

NHTSA said in a statement that the investigation would scrutinize “the system’s potential failure to detect and disengage in specific situations where it cannot adequately operate, and the extent to which it can act to reduce risk.” Tesla did not respond to a request for comment.

The fatal crash happened on Interstate 17 in Arizona, about an hour and a half north of Phoenix, according to NHTSA records and the Arizona Department of Public Safety. Two vehicles had crashed into one another, and the driver of a third vehicle stopped to provide aid. A Tesla Model Y ran into the 71-year-old woman, who had exited the third vehicle to help direct traffic. The Department of Public Safety said the sun was in the Tesla driver’s eyes. The driver did not get a ticket.

Full Self-Driving is available in most Teslas in the United States, about 2.4 million vehicles, according to regulators, though not all of their owners have opted to buy the FSD package. About half of Tesla owners were using the FSD system in the first quarter of this year, and that figure was still increasing, Musk said in April. The package costs $8,000, or $99 a month.

Neither Autopilot nor Full Self-Driving is designed to make a vehicle fully automated. Both require human drivers to remain ready to intervene. Autopilot, which comes standard on most Tesla vehicles, provides an advanced form of cruise control and can steer on well-marked roads. Full Self-Driving allows the vehicle to navigate itself, change lanes on highways and steer on city streets, and can handle stop lights.

The four reported crashes involved Tesla vehicles that entered areas where visibility on the roadway was poor, because of glare, fog or airborne dust, and Full Self-Driving was found to be active, NHTSA said. In addition to the pedestrian’s death, two of the other crashes involved an injury, according to NHTSA and the Virginia State Police. The investigation covers Tesla Models S and X from 2016 to 2024, Model 3s from 2017 through 2024, Model Y vehicles from 2020 through 2024, and Cybertruck vehicles from 2023 or 2024.

Matthew Wansley, a professor at Yeshiva University’s Cardozo School of Law in New York who specializes in emerging automotive technologies, said the new investigation could be linked to Tesla’s decision to prioritize cameras in its automated systems. Other kinds of sensors like radar and the laser-based lidar can help detect obstacles even when visibility is poor. Musk has previously said that lidar was unnecessary and that automakers who relied on it were “doomed.”

“Tesla made this decision when lidar was really expensive, and for whatever reason they seem to be sticking with it,” Wansley said. “Tesla’s position on sensors has always been a bit of an outlier, so it’s definitely worth investigation.”

Musk has said that roads are designed to be navigated by vision, relying on drivers’ eyes and brains. But Steven Shladover, a research engineer at the University of California at Berkeley, said cameras can struggle in the same kinds of fog and dust that make it hard for a human driver to see.

“If it doesn’t recognize that there’s a problem up ahead, it’s not necessarily going to do anything other than continue to drive,” Shladover said.

NHTSA has little power to review technology on vehicles before they are sold to the public, relying instead on its authority to investigate safety problems and order automakers to make fixes. The agency gathered information about the four Tesla crashes under a 2021 order that requires automakers to submit details about incidents involving advanced driver-assistance technology.

The Virginia State Police said it responded to a crash involving a Tesla Model 3 on Interstate 81 in March. The Tesla rear-ended a Ford Fusion that was having mechanical issues, driving slower than the Tesla and smoking, police said. The Tesla driver suffered minor injuries and was cited for following too closely, police said.

While Tesla tries to set out the systems’ limitations, Musk also has a record of overpromising about his vehicles’ autonomous driving capabilities.

Musk’s statements on self-driving and the company’s marketing of Autopilot and Full Self-Driving have led to multiple probes — including by the Justice Department and the Securities and Exchange Commission — into whether he and Tesla have overstated their capabilities.

In a January regulatory filing, Tesla said it “regularly” receives requests from regulators and governmental authorities. The company has said that it is cooperating with the requests and that “to our knowledge no government agency in any ongoing investigation has concluded that any wrongdoing occurred.”

Experts said the Arizona and Virginia crashes raise questions about drivers’ ability to respond when the system encounters an unusual scenario and needs them to take over, an issue that has long been a concern of safety advocates who worry that drivers are lulled into putting too much faith in the technology.

Brad Templeton, a consultant who has worked on autonomous vehicles, said Tesla’s branding doesn’t help. It refers to the system as Full Self-Driving (Supervised).

“This name definitely does confuse people,” Templeton said.

Tesla has issued recalls for Full Self-Driving at least twice in the past. In one case in 2023, it issued an update to the system to address cases of vehicles speeding and behaving unpredictably or illegally at intersections. NHTSA said Friday that the recall was linked to an earlier federal investigation into Tesla’s systems.

Several reports from The Washington Post have detailed flaws in the Autopilot and Full Self-Driving systems beginning last year. The issues included Tesla’s decision to eliminate radar, leading to an uptick in crashes and near misses involving its driver-assistance systems, along with the ability of drivers to activate Autopilot outside the conditions for which it was designed.

In one crash involving Autopilot, driver Jeremy Banner was killed when his Tesla struck a semi-truck trailer at nearly 70 mph after the truck pulled into cross-traffic on a highway where Autopilot was not intended to be used. In all, The Post identified eight fatal or serious crashes that took place in areas where Autopilot was not intended to be activated. Last December, Tesla issued a recall of nearly every vehicle it had built to address what were called “insufficient” safeguards against misuse.

But in April, NHTSA said it had opened a new probe into whether the recall worked. Just months after the recall, a Tesla Model S using Autopilot hit and killed a motorcyclist outside Seattle. NHTSA said Friday that it was “working as quickly as possible to bring this investigation to a conclusion.”

While regulators continue to examine Tesla’s driver-assistance systems, Musk is betting on fully autonomous vehicles. He unveiled a new vehicle dubbed the Cybercab at a splashy event at Warner Bros. Studios in Burbank, Calif., this month. The vehicle would not have pedals or a steering wheel, and Musk suggested it could be in production by 2026. In the meantime, he said he planned to have existing Tesla models drive fully autonomously in California and Texas next year.

But the step up from driver assistance to a fully autonomous vehicle is a big one, with the vehicle no longer able to rely on a human at the wheel as a backup.

“It’s a radically different level of complexity,” Shladover said.
